WTM2101: DNN and HMM Models for Speech Recognition

Speech recognition converts human speech into text or commands. It works by analyzing the acoustic features of the speech signal to identify words, phrases, or complete sentences. A typical system has three main stages: speech signal preprocessing, feature extraction, and recognition by a trained model.

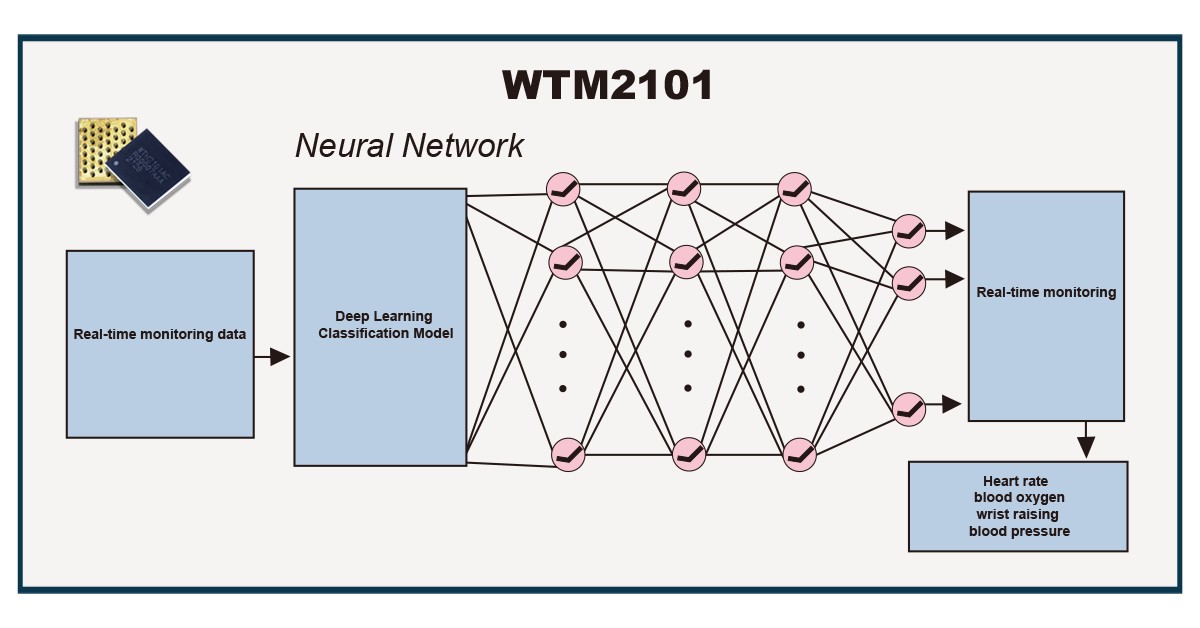

Speech recognition models are based mainly on deep learning. During training, the model learns the mapping between speech signals and text; at inference time, it applies that mapping to convert new speech into the corresponding text or commands.

The WTM2101 chip adopts a computing-in-memory architecture, which integrates memory and processing in the same unit and thereby enables efficient data processing and model inference.

In speech recognition, both DNN and HMM models play important roles. The DNN model is mainly used for feature extraction and classification of speech signals, while the HMM model is used for temporal modeling and recognition. The WTM2101 chip uses its computing-in-memory architecture to combine the strengths of both models.

Specifically, the WTM2101 chip performs speech recognition in the following steps:

Front-end processing of the speech signal: The speech signal is filtered and passed through MFCC feature extraction and other processing steps, which convert it into a sequence of feature vectors.

Feature extraction with the DNN model: The feature vector sequence is fed into the DNN model, which performs feature extraction and classification to produce a high-level representation of the speech signal.

Temporal modeling with the HMM model: The output of the DNN model is fed into the HMM model, which models how the speech signal evolves over time.

Recognition and decoding: Dynamic programming and other algorithms decode the output of the HMM model to produce the final text result.
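The last two steps can be illustrated with a small sketch. It assumes a common hybrid DNN-HMM setup in which per-frame DNN posteriors are divided by state priors and then decoded with the Viterbi algorithm, a standard dynamic programming method. The source does not say which decoder the WTM2101 uses, and the function name `viterbi`, the three-state left-to-right HMM, and all numbers below are hypothetical toy values.

```python
import numpy as np

def viterbi(log_obs, log_trans, log_init):
    """Return the most likely HMM state path.

    log_obs:   (T, S) per-frame log scores for each state
    log_trans: (S, S) log transition probabilities, row = from, column = to
    log_init:  (S,)   log initial-state probabilities
    """
    T, S = log_obs.shape
    score = log_init + log_obs[0]           # best log score ending in each state
    backptr = np.zeros((T, S), dtype=int)   # best predecessor of each state
    for t in range(1, T):
        cand = score[:, None] + log_trans   # cand[i, j]: leave state i, enter j
        backptr[t] = np.argmax(cand, axis=0)
        score = np.max(cand, axis=0) + log_obs[t]
    # Trace the best path backwards from the best final state.
    path = [int(np.argmax(score))]
    for t in range(T - 1, 0, -1):
        path.append(int(backptr[t, path[-1]]))
    return path[::-1]

# Hypothetical DNN output: posteriors over 3 HMM states for 5 frames.
posteriors = np.array([
    [0.8, 0.1, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.6, 0.3],
    [0.1, 0.2, 0.7],
])
priors = np.full(3, 1.0 / 3.0)              # assumed uniform state priors

# Left-to-right HMM: each state may repeat or advance to the next one.
trans = np.array([
    [0.6, 0.4, 0.0],
    [0.0, 0.6, 0.4],
    [0.0, 0.0, 1.0],
])
init = np.array([1.0, 0.0, 0.0])            # decoding must start in state 0

with np.errstate(divide="ignore"):          # log(0) = -inf is intended here
    log_trans = np.log(trans)
    log_init = np.log(init)

# Scaled likelihoods: posterior / prior, computed in the log domain.
log_obs = np.log(posteriors) - np.log(priors)

best_path = viterbi(log_obs, log_trans, log_init)
print(best_path)  # [0, 0, 1, 1, 2]
```

In a real recognizer the states would correspond to sub-phonetic units and the decoded state path would be mapped to words through a lexicon; the toy example only shows how the temporal constraints of the HMM are combined with the frame-level DNN scores.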

Thanks to the efficient processing of its computing-in-memory architecture, the WTM2101 chip offers low power consumption, high accuracy, and fast feature extraction and temporal modeling. It is widely used in speech recognition and natural language processing.

On the WTM2101 chip, speech recognition based on the computing-in-memory architecture can be applied to scenarios such as voice command control, where devices respond to spoken commands, for example turning lights on or off or opening and closing windows. The speech recognition in the WTM2101 chip provides several functions, including but not limited to the following:

- Wake-up word detection: The built-in wake-up engine of the WTM2101 chip analyzes environmental sounds in real time and quickly recognizes wake-up words to activate the speech recognition function of the speaker.

- Voice command recognition: The speech recognition engine in the WTM2101 chip recognizes various types of user voice commands, including music control, device control, and Q&A. Users can control the speaker by voice, which makes interaction easier and more convenient.

- Sound enhancement: The built-in sound enhancement algorithm in the WTM2101 chip provides noise reduction, echo suppression, and adaptive gain control. These improve the clarity and stability of the speech signal, and in turn speech recognition accuracy and the user experience.
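As a rough illustration of wake-up word detection, the sketch below smooths a stream of per-frame wake-word scores with a moving average and triggers only when the average crosses a threshold, so that a single noisy frame does not activate the device. This is a generic technique, not the documented WTM2101 engine; the function `detect_wake_word`, the window length, the threshold, and the score values are all assumptions made for the example.

```python
from collections import deque

def detect_wake_word(scores, window=3, threshold=0.9):
    """Return the index of the first frame at which the moving average of
    the last `window` wake-word scores reaches `threshold`, else None."""
    recent = deque(maxlen=window)           # sliding window of latest scores
    for i, score in enumerate(scores):
        recent.append(score)
        if len(recent) == window and sum(recent) / window >= threshold:
            return i
    return None

# Hypothetical per-frame wake-word probabilities from an acoustic model.
frame_scores = [0.1, 0.2, 0.9, 0.1, 0.9, 0.95, 0.9, 0.92, 0.97, 0.3]
trigger_frame = detect_wake_word(frame_scores)
print(trigger_frame)  # 6

# An isolated spike is averaged away and does not trigger.
spike_only = detect_wake_word([0.1, 0.99, 0.1, 0.1])
print(spike_only)  # None
```

A longer window lowers the false-trigger rate at the cost of a slower response, which is the main trade-off to tune in a scheme like this.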

Currently, the WTM2101's speech recognition is used for wake-up word detection and voice command recognition in smart home devices, smart glasses, and other products. It is expected to find wider application in the future.

https://en.witmem.com/news/product_news1/speech_recognition_chip.html
